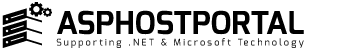

An application delivery controller (ADC), commonly referred to as a load balancer, is a software, hardware, or cloud service that distributes large volumes of incoming traffic from users and applications across a pool of application servers and services. A load balancer’s goals are to use network and application server resources efficiently, to provide high availability by falling back to backup application resources when the primary one is unavailable, and to shorten response times so that users see high performance and low latency. It serves as a traffic director in front of your apps, distributing client requests among all application servers that can process them in a way that optimizes throughput and speed. This prevents any one server from being overloaded, which could degrade performance. If an application server instance goes down, the load balancer redirects traffic to the remaining application servers. When a new application server instance is added to the server group, the load balancer automatically incorporates it into its distribution algorithm.

The Load Balancing Concept

Load balancing, sometimes referred to as server farming or server pools, is the process of effectively allocating incoming network traffic among a collection of application servers. By reducing the load on individual application server instances, this process improves the efficiency of the application servers, resulting in faster performance and shorter response times for users. Most high-traffic web-facing applications rely on load balancing to operate reliably. Splitting user requests among several servers significantly reduces user wait times, which leads to a better user experience.

In order to improve performance, availability, and scalability, load balancing distributes workloads among several computing resources, such as servers, virtual machines, or containers. This ensures that no single resource is overloaded with traffic. Load balancing can be applied at different layers in cloud computing, such as the network, application, and database layers.

Load balancers in cloud-based deployments can also lower operating costs and increase application scalability by automatically adding and removing application servers based on the volume of requests being handled.
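The scaling decision described above can be sketched in a few lines. This is only an illustration: the function name `desired_server_count`, the per-server capacity figure, and the minimum and maximum bounds are assumptions made for this example, not settings from any particular cloud provider.

```python
import math


def desired_server_count(
    requests_per_second: float,
    capacity_per_server: float,
    min_servers: int = 2,
    max_servers: int = 10,
) -> int:
    """Return how many servers are needed for the current request volume.

    All parameters are hypothetical tuning values chosen for illustration.
    """
    # Round up so that the pool always has enough capacity for the load.
    needed = math.ceil(requests_per_second / capacity_per_server)
    # Clamp to the allowed range: never below the minimum kept for
    # redundancy, never above the maximum allowed by the budget.
    return max(min_servers, min(max_servers, needed))


# 950 requests/s at an assumed 200 requests/s per server needs 5 servers.
servers_at_peak = desired_server_count(950, 200)
# At night the load drops, but the pool never shrinks below the minimum.
servers_at_night = desired_server_count(50, 200)
```

Keeping a minimum above one server preserves redundancy during quiet periods, while the maximum caps the cost of an unexpected traffic spike.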

How Load Balancers Work

The Function of Load Balancers in Application Delivery and Cybersecurity

Load balancers are essential to cybersecurity because they offer protection against malicious attacks on networks and applications. They help mitigate SYN floods, DDoS attacks at the application and network levels, and other assaults on web-facing applications. If a load balancer detects malicious attempts to flood applications with spurious requests or to exhaust connection and CPU resources, or if a server becomes vulnerable because of the volume of malicious requests, the DDoS traffic can be rerouted to a dedicated DDoS protection appliance or service.

In addition, many load balancers have a web application firewall (WAF) or web application and API protection (WAAP) built in, which scans user requests for attempts to send malicious data to applications.

In addition to minimizing the application attack surface, load balancers also remove single points of failure and stop resource exhaustion and link saturation.

As the main point of contact for users and clients in application delivery, load balancers divide requests among several targets in various availability zones and regions. This feature, which is also known as server and global server load balancing, improves fault tolerance and application availability while aiding in disaster recovery.

Adding “listeners” to the load balancer allows you to control the distribution of traffic. Listeners are configured with particular protocols and ports, and they forward requests to a target group of registered applications. When a listener’s rule is satisfied, the relevant target group of applications receives the traffic. Load balancers can establish multiple target groups for various kinds of requests, along with multiple rules that match user request content to those target groups.
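As a rough illustration of how a listener, its rules, and its target groups fit together, consider the minimal sketch below. The `Rule` and `Listener` classes, the path-prefix matching, and the target group names are all hypothetical and invented for this example. Real load balancers expose their own configuration models and typically support more match conditions, such as host names and headers.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Rule:
    """Hypothetical content rule: a URL path prefix mapped to a target group."""
    path_prefix: str
    target_group: str


@dataclass
class Listener:
    """Hypothetical listener bound to one protocol and one port."""
    protocol: str
    port: int
    default_target_group: str
    rules: List[Rule] = field(default_factory=list)

    def route(self, path: str) -> str:
        # Rules are evaluated in order and the first match wins.
        for rule in self.rules:
            if path.startswith(rule.path_prefix):
                return rule.target_group
        # No rule matched, so fall back to the default target group.
        return self.default_target_group


listener = Listener(
    protocol="HTTPS",
    port=443,
    default_target_group="web-servers",
    rules=[Rule("/api/", "api-servers"), Rule("/static/", "static-servers")],
)
```

With this configuration, `listener.route("/api/users")` selects the `api-servers` group, while a path that matches no rule, such as `/index.html`, falls through to `web-servers`.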

You need to add a listener to your load balancer before you can use it. The load balancer then routes requests to registered targets based on the content rules for your listeners. It also monitors the health of each registered application server in every enabled availability zone and routes requests only to healthy targets.

Listeners support numerous protocols and ports. For an HTTPS listener, the load balancer can handle TLS/SSL encryption and decryption, freeing up your application servers to concentrate on their business logic. If the protocol used by the listener is HTTPS, you need to install at least one SSL server certificate on the listener. Listeners check for connection requests from clients and distribute them to multiple targets, which improves application availability, performance, and reliability.

The Value and Advantages of Load Balancing

Load balancing is crucial because the load balancer runs routine health checks against the host machines to make sure they are able to receive client requests. If one of the host machines is unavailable, the load balancer diverts client requests to the other available hosts. In addition, load balancers remove problematic servers from the pool until the problem is fixed.
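The health-check behavior can be sketched as follows. This is a simplified model under stated assumptions: the `ServerPool` class, the `probe` callback, and the threshold of two consecutive failures are hypothetical choices for this example. A real load balancer would typically probe each server over the network, for instance with a TCP connect or an HTTP request.

```python
from typing import Callable, Dict, List


class ServerPool:
    """Tracks which servers are currently eligible to receive traffic."""

    def __init__(self, servers: List[str], unhealthy_threshold: int = 2):
        self.servers = list(servers)
        # Assumed policy: a server is pulled after this many failed checks in a row.
        self.unhealthy_threshold = unhealthy_threshold
        self.failures: Dict[str, int] = {server: 0 for server in self.servers}

    def run_health_checks(self, probe: Callable[[str], bool]) -> None:
        """Probe every server once and update its consecutive failure count."""
        for server in self.servers:
            if probe(server):
                self.failures[server] = 0  # a success resets the counter
            else:
                self.failures[server] += 1

    def healthy_servers(self) -> List[str]:
        """Servers that have not reached the failure threshold."""
        return [
            server
            for server in self.servers
            if self.failures[server] < self.unhealthy_threshold
        ]


pool = ServerPool(["app-1", "app-2", "app-3"])
down = {"app-2"}  # simulate an outage on one host


def probe(server: str) -> bool:
    # Stand-in for a real network check.
    return server not in down


pool.run_health_checks(probe)
pool.run_health_checks(probe)
during_outage = pool.healthy_servers()

down.clear()  # the host recovers
pool.run_health_checks(probe)
after_recovery = pool.healthy_servers()
```

Requiring several consecutive failures before removing a server avoids pulling a host out of rotation because of one dropped probe, and the counter reset lets a repaired server rejoin the pool automatically.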

Reduced downtime, scalability, redundancy, flexibility, and application efficiency are some advantages of load balancing. By distributing workloads among several application resources, it lowers the strain on each one and improves end-to-end traffic performance. Load balancing also removes single points of failure among the application servers, so the system can tolerate server failures and remain highly available. It makes it simpler to scale resources up or down in response to demand fluctuations or spikes in user traffic, and it makes sure that resources are used effectively, which lowers waste and aids in cost optimization.

Types of Load Balancing

Several techniques can be used for load balancing, including:

Software Load Balancing:

Software load balancers, also known as virtual load balancers, function similarly to hardware load balancers but don’t need a dedicated physical device. They are applications installed on servers or provided as native or managed cloud services. Using request attributes such as the client IP address and HTTP headers, they route network traffic to various application servers and listeners according to rules that match the request’s contents.

Hardware Load Balancing:

The main objective of hardware load balancing is high performance. Hardware load balancers are physical devices that split network traffic among several application servers. They accelerate request and response processing by using specialized device drivers, dedicated encryption and decryption hardware, and dedicated CPUs.

Network vs. Application Load Balancing:

Network load balancers, or NLBs, are high-performance devices that handle millions of requests per second with extremely low latency. They function at the transport layer, or Layer 4 of the OSI model. They work best when used for TCP traffic load balancing.

Conversely, application load balancers, or ALBs, function at the application layer, or Layer 7 of the OSI model. They are designed to balance HTTP and HTTPS traffic, and they offer sophisticated features such as content-based routing rules.

Use-cases for Network-Level Load Balancing:

Load balancing at the network level comes in handy when handling a lot of incoming TCP requests.

Use-cases for Application-Level Load Balancing:

Application-level load balancing is the right choice when incoming HTTP or HTTPS traffic needs to be split among several application servers based on the content of each request.

Load Balancing Algorithms

The most common load balancing algorithms are:

Round-Robin Technique:

One of the simplest ways to distribute client requests among several servers is to use the Round-Robin technique. The load balancer sends a client request to each server in turn as it moves down the list of servers in the group. The load balancer loops back and continues down the list after it reaches the end. Round-robin load balancing’s primary advantage is its incredibly easy implementation.
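A minimal sketch of the Round-Robin technique is shown below. The server names are placeholders, and the standard library’s `itertools.cycle` stands in for the “loop back to the start of the list” behavior described above.

```python
from itertools import cycle
from typing import Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Yield servers in list order, looping back to the start forever."""
    return cycle(servers)


picker = round_robin(["app-1", "app-2", "app-3"])
# Assign five incoming requests; the fourth wraps around to the first server.
assignments = [next(picker) for _ in range(5)]
```

Here `assignments` is `["app-1", "app-2", "app-3", "app-1", "app-2"]`. Note that the technique ignores how busy each server is, which is the trade-off for its simplicity.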

Weighted Round-Robin:

Weighted Round-Robin is a more advanced load balancing configuration. Like basic Round-Robin, it cycles through multiple servers (or, in DNS-based setups, points records to multiple IP addresses), but it adds flexibility by letting you assign each server a weight according to your needs. Servers with higher weights receive a larger portion of the incoming requests.
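One simple way to picture Weighted Round-Robin is a generator that repeats each server according to its weight within every cycle. This is a deliberately naive sketch with made-up server names and weights. Production load balancers often interleave the picks more evenly so that a heavily weighted server does not receive a burst of consecutive requests.

```python
from itertools import islice
from typing import Dict, Iterator


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Yield servers forever; each appears `weight` times per cycle."""
    if not any(weight > 0 for weight in weights.values()):
        raise ValueError("at least one server needs a positive weight")
    while True:
        for server, weight in weights.items():
            # A weight of 3 means three requests per cycle for this server.
            for _ in range(weight):
                yield server


picker = weighted_round_robin({"app-large": 3, "app-small": 1})
first_cycle = list(islice(picker, 4))
```

With these weights, `first_cycle` is `["app-large", "app-large", "app-large", "app-small"]`, so the larger server handles three out of every four requests.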

IP Hash:

IP Hash is a load balancing method built on a hashing algorithm. When a request enters the network, the load balancer hashes the client’s IP address (some implementations also include the destination address) and uses the result to select a server. Because the same address always produces the same hash, requests from a given client are consistently routed to the same server for as long as the server pool does not change, while different clients are spread across the pool.
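The sketch below shows one way an IP Hash selection could work. The choice of SHA-256, the `pick_server` function name, and the sample addresses are assumptions made for illustration, and actual load balancers may hash different fields or use a different hash function.

```python
import hashlib
from typing import List


def pick_server(client_ip: str, servers: List[str]) -> str:
    """Map a client IP address to a server using a stable hash."""
    # Python's built-in hash() is randomized per process for strings,
    # so a cryptographic digest is used to get a repeatable value.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Turn the first 8 bytes into an integer and reduce it to a list index.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
first_visit = pick_server("203.0.113.7", servers)
second_visit = pick_server("203.0.113.7", servers)  # same server as first_visit
```

The same client always lands on the same server, which helps applications that keep session state in server memory. One known limitation of the plain modulo approach is that adding or removing a server remaps most clients, which is why some systems use consistent hashing instead.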

Least Connections Method:

The Least Connections technique assigns each new user request to the server with the fewest active connections at that moment. Because it reacts to the current load rather than following a fixed order, it uses resources effectively and improves the overall performance and dependability of the application.
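A minimal sketch of the Least Connections method might look like the following. The `LeastConnectionsBalancer` class and its `acquire` and `release` methods are hypothetical names for this example. The key idea is that the balancer tracks how many connections each server currently holds.

```python
from typing import Dict, List


class LeastConnectionsBalancer:
    """Sends each new request to the server with the fewest open connections."""

    def __init__(self, servers: List[str]):
        self.active: Dict[str, int] = {server: 0 for server in servers}

    def acquire(self) -> str:
        # On a tie, min() keeps the first server in insertion order.
        server = min(self.active, key=lambda name: self.active[name])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Called when a connection closes; never drop below zero.
        if self.active[server] > 0:
            self.active[server] -= 1


balancer = LeastConnectionsBalancer(["app-1", "app-2"])
first = balancer.acquire()   # app-1 (both idle, first in order wins)
second = balancer.acquire()  # app-2 (app-1 now has one connection)
balancer.release(first)      # the connection on app-1 finishes
third = balancer.acquire()   # app-1 again, since it is idle once more
```

Unlike Round-Robin, this approach adapts when some requests take much longer than others, because a server stuck on slow requests keeps a high connection count and is passed over.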

Adaptive Techniques for Improved Efficiency:

Adaptive load balancing makes decisions based on status indicators that the load balancer retrieves from the application servers. An agent on every server determines that server’s status. The load balancer routinely requests this status data from each server and sets a dynamic weight for each server accordingly.
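To make the idea of a dynamic weight concrete, the sketch below converts a status value reported by each server’s agent into a weight. Using CPU utilization as the only indicator, the linear formula, and the `max_weight` of 10 are all assumptions for this example, and real adaptive schemes may combine several indicators such as memory, connection counts, and response times.

```python
from typing import Dict


def dynamic_weights(cpu_utilization: Dict[str, float], max_weight: int = 10) -> Dict[str, int]:
    """Turn agent-reported CPU utilization (0.0 to 1.0) into integer weights.

    Busier servers get lower weights, so they receive fewer new requests.
    """
    weights: Dict[str, int] = {}
    for server, cpu in cpu_utilization.items():
        # Guard against out-of-range readings from an agent.
        cpu = min(max(cpu, 0.0), 1.0)
        # Keep a floor of 1 so a busy server is slowed down, not cut off.
        weights[server] = max(1, round((1.0 - cpu) * max_weight))
    return weights


# Hypothetical readings collected from the agents on two servers.
weights = dynamic_weights({"app-1": 0.2, "app-2": 0.9})
```

Here the lightly loaded `app-1` receives a weight of 8 and the busy `app-2` a weight of 1. Weights like these could then drive a weighted distribution algorithm, and they would be recalculated each time the load balancer polls the agents.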

The Benefits of Load Balancing

Load balancing offers many advantages, such as increased scalability, dependability, and performance:

Scalability:

Especially in cloud deployments where licensing is pay-as-you-go (PAYG), load balancing makes it simpler to scale application resources up or down as needed, helping to handle traffic spikes or changes in demand and thereby saving on costs.

Improved Performance:

By dividing up the workload among several resources, load balancing lessens the strain on each application resource and enhances system performance as a whole.

Application Delivery:

A load balancer acts as a client’s single point of contact in the context of application delivery. To improve the availability of your application, it divides incoming application traffic among several application instances that are deployed as hardware, software, or cloud service instances in various “availability zones” and regions.

Cybersecurity:

In terms of cybersecurity, load balancers shield applications from malicious requests and provide an additional line of defense against DDoS attacks on the network and applications. In addition to reducing the attack surface, load balancers can help eliminate single points of failure and increase the difficulty of depleting resources and overloading links.

Reliability:

By preventing a single point of failure within the system, load balancing offers fault tolerance and high availability to manage application server failures.

The Security Risks Associated with Load Balancing

Although load balancing is necessary for allocating network traffic and guaranteeing peak performance, it can also present a number of security risks, such as:

Single Point of Failure:

Although load balancing helps to lower the risk of a single point of failure, the load balancer itself can become one if it is not deployed correctly. Load balancers must be deployed in an active-passive, active-active, or cluster configuration to avoid single points of failure.

Security Risks:

Load balancing can create security risks if it is not configured correctly, such as exposing sensitive data or permitting unwanted access. To prevent unwanted access, administrator privileges must be tightly restricted, patches must be applied on a regular basis, and default administration passwords must be changed. To further improve security and guard against granting too many permissions, many load balancers offer distinct user, administrator, and viewer roles.

Vulnerabilities:

Vulnerabilities may exist in the load balancer’s configuration, usage, or hardware itself. Enforce patching and monitor announcements of Common Vulnerabilities and Exposures (CVEs).

Optimizing Application Delivery with Load Balancing

Load balancing is frequently used for web-facing applications to split up user and application requests among multiple application servers. As a result, each application server experiences less stress and becomes more effective, improving performance and lowering user latency. Because requests are spread evenly, user wait times are significantly reduced and a large number of requests can be handled by fewer application server instances. This improves user experience and lowers application delivery costs.

Load balancing ensures that resources are used effectively and that there is no single point of failure, which helps to improve the overall performance and reliability of applications. In addition, it offers high availability and fault tolerance to manage traffic spikes and application server failures while assisting with on-demand application scaling.

By distributing requests to servers according to a predetermined distribution algorithm, load balancers speed up response times, which enhances application performance and user experience. They ensure optimal server utilization, avoid single points of failure, and enable more user requests to be processed with fewer application servers, all of which saves money.

Load balancing relieves pressure on overworked servers and guarantees optimal processing, high availability, and dependability by forwarding requests to available application servers. When demand rises or falls, load balancers dynamically add or remove servers. In this way, load balancing lowers operating costs and offers flexibility in responding to demand.

How to Select the Best Load Balancer for Your Company

Selecting the best load balancer for your company is an important choice that can have a big impact on security and performance. The primary elements to take into account when selecting a load balancer are:

Evaluate criticality and security requirements

Think about the significance of the apps you’re balancing and the security requirements they have.

Seek flexibility and scalability

Look for solutions that are simple to scale up or down in response to your evolving requirements.

Identify immediate and long-term needs

Recognize your needs now and plan ahead for future requirements.

Compare price points and features

Conduct a cost analysis that takes operating expenses (OpEx) and capital expenditures (CapEx) into account.

Assess traffic type and volume

Analyze the type and volume of traffic that your applications handle.

Conclusion

In order to meet a variety of business needs, ASPHostPortal offers sophisticated and all-inclusive load balancing solutions as well as application delivery capabilities to guarantee the best possible service levels for applications in virtual, cloud, and software-defined data centers.

Andriy Kravets is a writer and an experienced .NET developer who enjoys using .NET for everyday development. He likes to build cross-platform libraries and software with .NET.