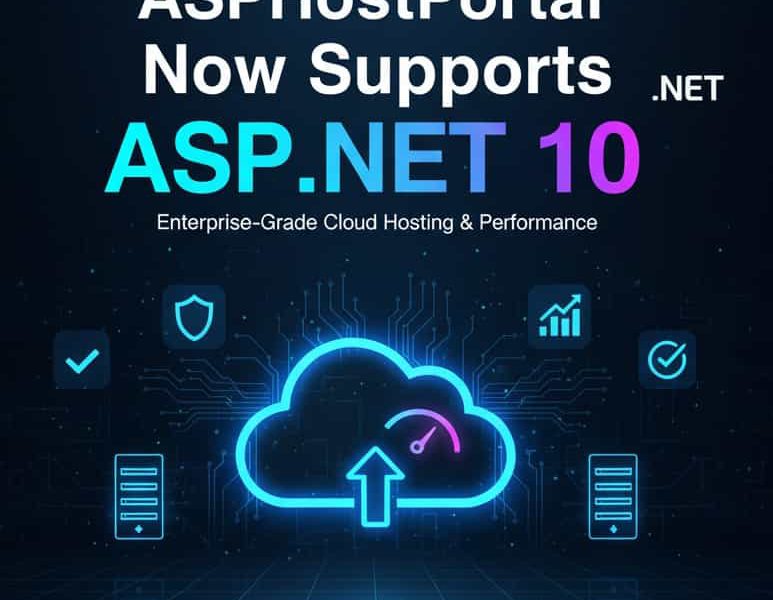

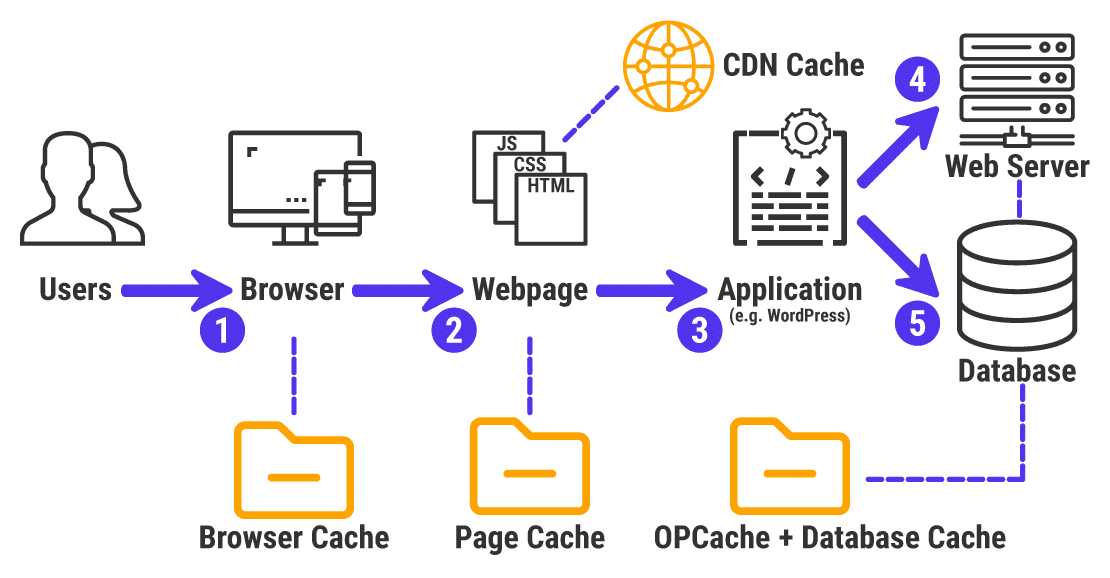

Caching is one of the easiest ways to greatly improve the performance of your application. It is the practice of temporarily storing data in a location that is faster to access. Usually, you will cache the output of expensive operations or data that is accessed frequently.

With caching, subsequent requests for the same data can be served from the cache instead of being fetched from the original source.

ASP.NET Core offers several caching abstractions, such as IMemoryCache, IDistributedCache, and HybridCache, which was introduced alongside .NET 9.

In this article, we will look at how to implement caching in ASP.NET Core applications.

How Applications Perform Better With Caching

Caching improves your application's performance by lowering latency and server load, which in turn improves scalability and user experience.

- Faster data retrieval: Fetching data from the source (such as a database or API) takes much longer than reading it from the cache, which is usually stored in memory (RAM).

- Fewer database queries: When frequently accessed data is cached, fewer queries reach the database, which reduces the load on the database server.

- Lower CPU usage: Processing API responses or rendering web pages can both demand a lot of CPU power. There is less need for repetitive, CPU-intensive tasks when the results are cached.

- Handling increased traffic: Caching enables your application to handle more concurrent users and requests by lessening the load on backend systems.

- Distributed caching: Redis and other distributed cache solutions allow you to scale the cache across several servers, which boosts resilience and performance even more.

Caching Abstractions in ASP.NET Core

ASP.NET Core offers two main abstractions for working with caches:

- IMemoryCache: stores data in the web server's memory. It is easy to use but not ideal for distributed scenarios.

- IDistributedCache: offers a more resilient option for distributed applications. It lets you store cached data in a distributed cache such as Redis.
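To make the difference concrete, here is a minimal console sketch, assuming a project that references the Microsoft.Extensions.Caching.Memory package, that uses both abstractions directly without DI. The Product record and the CacheAbstractionsDemo class are hypothetical names for illustration. MemoryDistributedCache is the in-memory IDistributedCache implementation that AddDistributedMemoryCache registers. The sketch shows that IMemoryCache keeps the object reference itself, while IDistributedCache works with byte arrays (or strings via extension methods), so richer objects have to be serialized first.

```csharp
using Microsoft.Extensions.Caching.Distributed;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Options;

// Hypothetical entity used only for this illustration.
public record Product(int Id, string Name);

public static class CacheAbstractionsDemo
{
    // IMemoryCache stores the object reference itself; no serialization happens.
    public static Product? RoundTripMemory(Product product)
    {
        using var memoryCache = new MemoryCache(new MemoryCacheOptions());
        memoryCache.Set(product.Id, product);
        return memoryCache.TryGetValue(product.Id, out Product? cached) ? cached : null;
    }

    // IDistributedCache stores bytes (strings via SetString/GetString),
    // so objects must be serialized before they are cached.
    public static string? RoundTripDistributed(string key, string value)
    {
        IDistributedCache distributedCache = new MemoryDistributedCache(
            Options.Create(new MemoryDistributedCacheOptions()));
        distributedCache.SetString(key, value);
        return distributedCache.GetString(key);
    }
}
```

Because IMemoryCache hands back the same instance, mutating a cached object changes what later readers see; a distributed cache always returns a fresh copy.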

To use these services, we must register them with the dependency injection (DI) container. AddDistributedMemoryCache registers a non-distributed, in-memory implementation of IDistributedCache, which is useful for development and testing.

```csharp
builder.Services.AddMemoryCache();
builder.Services.AddDistributedMemoryCache();
```

Here is how to use IMemoryCache. We first check the cache for the value and, if it is present, return it directly. If not, we retrieve the value from the database and store it in the cache for subsequent requests.

```csharp
using Microsoft.Extensions.Caching.Memory;

app.MapGet(
    "products/{id}",
    (int id, IMemoryCache cache, AppDbContext context) =>
    {
        // Cache hit: return the cached product without querying the database
        if (!cache.TryGetValue(id, out Product? product))
        {
            // Cache miss: load the product from the database
            product = context.Products.Find(id);

            // Expire after 2 minutes without access, and never later than 10 minutes
            var cacheEntryOptions = new MemoryCacheEntryOptions()
                .SetAbsoluteExpiration(TimeSpan.FromMinutes(10))
                .SetSlidingExpiration(TimeSpan.FromMinutes(2));

            cache.Set(id, product, cacheEntryOptions);
        }

        return Results.Ok(product);
    });
```

Cache expiration is another important topic. Stale or unused cache entries should be removed. You configure expiration by passing MemoryCacheEntryOptions when you set an entry. For example, SlidingExpiration removes an entry that has not been accessed within a given time window, while AbsoluteExpiration limits how long the entry can live regardless of how often it is accessed.
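When both options are set, as in the endpoint above, whichever deadline comes first wins. The hypothetical ExpirationModel helper below is only a model of that rule for illustration, not part of the MemoryCache API. MemoryCache treats the entry as expired from that point, although it may physically remove it later, during an access or a periodic scan.

```csharp
using System;

public static class ExpirationModel
{
    // Models when an entry with both an absolute and a sliding expiration
    // becomes stale. Hypothetical helper, not a MemoryCache API.
    public static DateTimeOffset ExpiresAt(
        DateTimeOffset createdAt,
        DateTimeOffset lastAccessedAt,
        TimeSpan absoluteExpirationRelativeToNow,
        TimeSpan slidingExpiration)
    {
        // Hard upper bound on the entry's lifetime, measured from creation.
        DateTimeOffset absoluteDeadline = createdAt + absoluteExpirationRelativeToNow;

        // Moves forward every time the entry is read.
        DateTimeOffset slidingDeadline = lastAccessedAt + slidingExpiration;

        // Whichever deadline comes first wins.
        return absoluteDeadline < slidingDeadline ? absoluteDeadline : slidingDeadline;
    }
}
```

With the endpoint's settings (10 minutes absolute, 2 minutes sliding), an entry created at 12:00 and last read at 12:03 expires at 12:05. If it was last read at 12:09, the absolute limit takes over and it expires at 12:10.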

Cache-Aside Pattern

The most popular caching technique is the cache-aside pattern. This is how it operates:

- Check the cache: Check the cache for the requested data.

- Fetch from source (if cache miss): Get the data from the source if it’s not already in the cache.

- Update the cache: Keep the retrieved information in the cache for later queries.
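For IMemoryCache, the Microsoft.Extensions.Caching.Memory package already packages these three steps as the GetOrCreateAsync extension method. The sketch below assumes that package; LoadFromSourceAsync is a hypothetical stand-in for a database query, and the SourceCalls counter exists only to show that the second lookup is served from the cache.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

public static class MemoryCacheAside
{
    private static int _sourceCalls;

    // Number of times the simulated data source was hit.
    public static int SourceCalls => Volatile.Read(ref _sourceCalls);

    // Hypothetical stand-in for a database query.
    private static Task<string> LoadFromSourceAsync(int id)
    {
        Interlocked.Increment(ref _sourceCalls);
        return Task.FromResult($"product-{id}");
    }

    // 1. Check the cache; 2. on a miss, run the factory; 3. store its result.
    public static Task<string?> GetProductAsync(IMemoryCache cache, int id) =>
        cache.GetOrCreateAsync(id, entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(10);
            return LoadFromSourceAsync(id);
        });
}
```

Note that GetOrCreateAsync does not take a lock, so concurrent misses for the same key can still run the factory more than once.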

We can implement the cache-aside pattern as an extension method on IDistributedCache:

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

public static class DistributedCacheExtensions
{
    // Used when the caller does not pass any expiration options
    public static DistributedCacheEntryOptions DefaultExpiration => new()
    {
        AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(2)
    };

    public static async Task<T?> GetOrCreateAsync<T>(
        this IDistributedCache cache,
        string key,
        Func<Task<T>> factory,
        DistributedCacheEntryOptions? cacheOptions = null)
    {
        // 1. Check the cache
        var cachedData = await cache.GetStringAsync(key);

        if (cachedData is not null)
        {
            return JsonSerializer.Deserialize<T>(cachedData);
        }

        // 2. Cache miss: fetch the data from the source
        var data = await factory();

        // 3. Store it in the cache for later requests
        await cache.SetStringAsync(
            key,
            JsonSerializer.Serialize(data),
            cacheOptions ?? DefaultExpiration);

        return data;
    }
}
```

We use JsonSerializer to serialize values to and from JSON strings. SetStringAsync also accepts a DistributedCacheEntryOptions argument that controls cache expiration; when the caller passes none, we fall back to a default two-minute absolute expiration.
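To see what actually ends up in the cache, the sketch below performs the same JsonSerializer round trip that GetOrCreateAsync does, using only System.Text.Json. The Product record and the JsonRoundTrip class are hypothetical. With default options, property names keep their C# casing, and positional records are deserialized through their constructor.

```csharp
using System.Text.Json;

// Hypothetical entity used only for this illustration.
public record Product(int Id, string Name);

public static class JsonRoundTrip
{
    // What GetOrCreateAsync stores via SetStringAsync on a cache miss.
    public static string ToCacheString(Product product) =>
        JsonSerializer.Serialize(product);

    // What GetOrCreateAsync returns on a cache hit.
    public static Product? FromCacheString(string cachedData) =>
        JsonSerializer.Deserialize<Product>(cachedData);
}
```

For example, ToCacheString(new Product(1, "Keyboard")) produces {"Id":1,"Name":"Keyboard"}, and FromCacheString turns that string back into an equal Product. Because every hit deserializes a new copy, changes made to the returned object do not affect the cached value.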

We would apply this extension method as follows:

```csharp
app.MapGet(
    "products/{id}",
    async (int id, IDistributedCache cache, AppDbContext context) =>
    {
        // Await the call: GetOrCreateAsync returns a Task, not the product itself
        var product = await cache.GetOrCreateAsync($"products-{id}", async () =>
        {
            var productFromDb = await context.Products.FindAsync(id);

            return productFromDb;
        });

        return Results.Ok(product);
    });
```

Pros and Cons of In-Memory Caching

Pros:

- Extremely fast

- Simple to implement

- No external dependencies

Cons:

- If the server restarts, the cached data is lost.

- Limited to a single server's RAM.

- Cached data is not shared across multiple instances of your application.

Distributed Caching With Redis

Redis is a popular in-memory data store that is frequently used as a high-performance distributed cache. You can use the StackExchange.Redis library to work with Redis directly in your ASP.NET Core application.

There is also the Microsoft.Extensions.Caching.StackExchangeRedis package, which provides a Redis-backed implementation of IDistributedCache.

```powershell
Install-Package Microsoft.Extensions.Caching.StackExchangeRedis
```

Here's how to register it with DI by providing a Redis connection string:

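```csharp
string connectionString = builder.Configuration.GetConnectionString("Redis")
    ?? throw new InvalidOperationException("Connection string 'Redis' is not configured.");

builder.Services.AddStackExchangeRedisCache(options =>
{
    options.Configuration = connectionString;
});
```

An alternative approach is to register an IConnectionMultiplexer as a service yourself and then supply it to the cache through the ConnectionMultiplexerFactory option, so the application and the cache share the same connection.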

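```csharp
using StackExchange.Redis;

string connectionString = builder.Configuration.GetConnectionString("Redis")
    ?? throw new InvalidOperationException("Connection string 'Redis' is not configured.");

// Create a single multiplexer and register it so other services can reuse it
IConnectionMultiplexer connectionMultiplexer =
    ConnectionMultiplexer.Connect(connectionString);

builder.Services.AddSingleton(connectionMultiplexer);

builder.Services.AddStackExchangeRedisCache(options =>
{
    // Hand the existing multiplexer to the Redis cache instead of opening a new connection
    options.ConnectionMultiplexerFactory =
        () => Task.FromResult(connectionMultiplexer);
});
```

Now, when you inject IDistributedCache, it will use Redis under the hood.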

Cache Stampede and HybridCache

A cache stampede occurs when many concurrent requests hit a cache miss for the same key and all try to retrieve the data from the source at once. ASP.NET Core's IMemoryCache and IDistributedCache do not guard against this race condition, so a stampede can overload your data source and defeat the purpose of caching.

Locking can partly solve the cache stampede problem, and .NET provides a multitude of locking and concurrency control options. The two most frequently used locking primitives are the lock statement and the Semaphore (or SemaphoreSlim) class. Because you cannot await inside a lock statement, SemaphoreSlim, with its WaitAsync method, is the better fit for asynchronous code like ours.

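```csharp
public static class DistributedCacheExtensions
{
    // One semaphore shared by all keys
    private static readonly SemaphoreSlim Semaphore = new SemaphoreSlim(1, 1);

    // Arguments omitted for brevity
    public static async Task<T?> GetOrCreateAsync<T>(...)
    {
        // Fetch data from cache, and return if present

        // Cache miss: acquire the semaphore before entering try,
        // so Release only runs after a successful WaitAsync
        await Semaphore.WaitAsync();

        try
        {
            // Another request may have filled the cache while we were waiting
            var cachedAfterWait = await cache.GetStringAsync(key);

            if (cachedAfterWait is not null)
            {
                return JsonSerializer.Deserialize<T>(cachedAfterWait);
            }

            var data = await factory();

            await cache.SetStringAsync(
                key,
                JsonSerializer.Serialize(data),
                cacheOptions ?? DefaultExpiration);

            // Return inside try: `data` is not in scope after the try block
            return data;
        }
        finally
        {
            Semaphore.Release();
        }
    }
}
```

The implementation above still suffers from lock contention: because a single semaphore guards every key, each cache miss waits on it, even when the requests are for unrelated keys. Locking on the key value would be a far better solution.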
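Here is a minimal sketch of per-key locking, assuming the same IDistributedCache setup as above. A ConcurrentDictionary hands out one SemaphoreSlim per key, so concurrent misses for the same key wait for a single factory call while misses for other keys proceed in parallel. GetOrCreateWithKeyLockAsync is a hypothetical name chosen to avoid clashing with the earlier extension method. For brevity, the semaphores are never removed from the dictionary, so an application with many distinct keys would need a cleanup strategy.

```csharp
using System;
using System.Collections.Concurrent;
using System.Text.Json;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

public static class KeyedLockingCacheExtensions
{
    // One semaphore per cache key (never evicted in this sketch).
    private static readonly ConcurrentDictionary<string, SemaphoreSlim> Locks = new();

    public static async Task<T?> GetOrCreateWithKeyLockAsync<T>(
        this IDistributedCache cache,
        string key,
        Func<Task<T>> factory,
        DistributedCacheEntryOptions? cacheOptions = null)
    {
        // Fast path: no locking on a cache hit.
        var cached = await cache.GetStringAsync(key);
        if (cached is not null)
        {
            return JsonSerializer.Deserialize<T>(cached);
        }

        // Only requests for this key contend for this semaphore.
        var keyLock = Locks.GetOrAdd(key, _ => new SemaphoreSlim(1, 1));
        await keyLock.WaitAsync();
        try
        {
            // Double-check: another request may have filled the cache meanwhile.
            cached = await cache.GetStringAsync(key);
            if (cached is not null)
            {
                return JsonSerializer.Deserialize<T>(cached);
            }

            var data = await factory();
            await cache.SetStringAsync(
                key,
                JsonSerializer.Serialize(data),
                cacheOptions ?? new DistributedCacheEntryOptions
                {
                    AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(2)
                });
            return data;
        }
        finally
        {
            keyLock.Release();
        }
    }
}
```

With this version, fifty simultaneous misses for "products-1" result in a single call to the factory, while a miss for "products-2" does not wait behind them.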

HybridCache, a new caching abstraction introduced with .NET 9, aims to address the drawbacks of IDistributedCache, including built-in protection against cache stampedes. See the HybridCache documentation for more information.
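As a rough illustration, assuming a console project that references the Microsoft.Extensions.Caching.Hybrid package (and Microsoft.Extensions.DependencyInjection to build a service provider outside ASP.NET Core), the sketch below resolves HybridCache and calls its GetOrCreateAsync method, whose factory receives a CancellationToken and returns a ValueTask. HybridCacheDemo, LoadProductAsync, and the call counter are hypothetical. Because HybridCache coalesces concurrent requests for the same key, the factory is expected to run only once; check the HybridCache documentation for the exact API of your package version.

```csharp
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Hybrid;
using Microsoft.Extensions.DependencyInjection;

public static class HybridCacheDemo
{
    private static int _factoryCalls;

    // Number of times the simulated data source was hit.
    public static int FactoryCalls => Volatile.Read(ref _factoryCalls);

    // Hypothetical stand-in for a database query.
    private static async ValueTask<string> LoadProductAsync(int id, CancellationToken token)
    {
        Interlocked.Increment(ref _factoryCalls);
        await Task.Delay(50, token);
        return $"product-{id}";
    }

    public static async Task<string[]> FetchConcurrentlyAsync(int id, int requests)
    {
        var services = new ServiceCollection();
        services.AddHybridCache(); // registers HybridCache with an in-memory primary cache
        using var provider = services.BuildServiceProvider();
        var cache = provider.GetRequiredService<HybridCache>();

        // Many concurrent requests for the same key; HybridCache runs the
        // factory once and shares the result with the waiting callers.
        var lookups = Enumerable.Range(0, requests).Select(_ =>
            cache.GetOrCreateAsync(
                $"products-{id}",
                token => LoadProductAsync(id, token)).AsTask());

        return await Task.WhenAll(lookups);
    }
}
```

Compared with the semaphore-based extension methods, the locking and the double-check live inside HybridCache, so application code only supplies the key and the factory.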

Summary

Caching is an effective way to improve the performance of web applications, and ASP.NET Core's caching abstractions make it simple to implement different caching strategies.

We can choose IMemoryCache for in-memory caching or IDistributedCache for distributed caching.

Finally, a few rules to close out this week’s issue:

- Use IMemoryCache for simple, in-memory caching.

- Use the cache-aside pattern to reduce database queries.

- Consider Redis as a high-performance distributed cache implementation.

- To share cached data among several applications, use IDistributedCache.

Happy coding, and we will be back with more interesting tutorials and articles.

Javier is a Content Specialist and .NET developer. He writes helpful guides and articles and assists with other marketing and .NET community work.